Human–AI Co‑Evolution and Agentic Research

Exploring a new research and education paradigm built around human–AI co‑evolution and agentic collaboration

Rather than treating AI as a simple tool, we envision a future in which human scientists and specialized AI agents evolve together. Human researchers remain at the center, setting goals and making key decisions, while AI agents assist with literature analysis, hypothesis generation and task management. This synergy aims to ease the bottlenecks of traditional hierarchical research structures and to accelerate innovation across disciplines. The following sections outline the core principles, technical frameworks and educational initiatives behind this vision.

Part 1: Human–AI Co‑Evolution — Redefining Research

Our Objective:

Current AI applications often focus on automation or substitution, yet real breakthroughs require tight coupling between human creativity and machine intelligence. In our vision, human–AI co‑evolution becomes a driving force: humans and AI agents continually influence each other, forming an iterative feedback loop. Such co‑evolution implies that AI algorithms learn from human choices, and the resulting AI suggestions reshape subsequent human decisions. By delegating literature mining, data curation and preliminary simulations to AI, human researchers can focus on high‑level reasoning, critical thinking and validation.

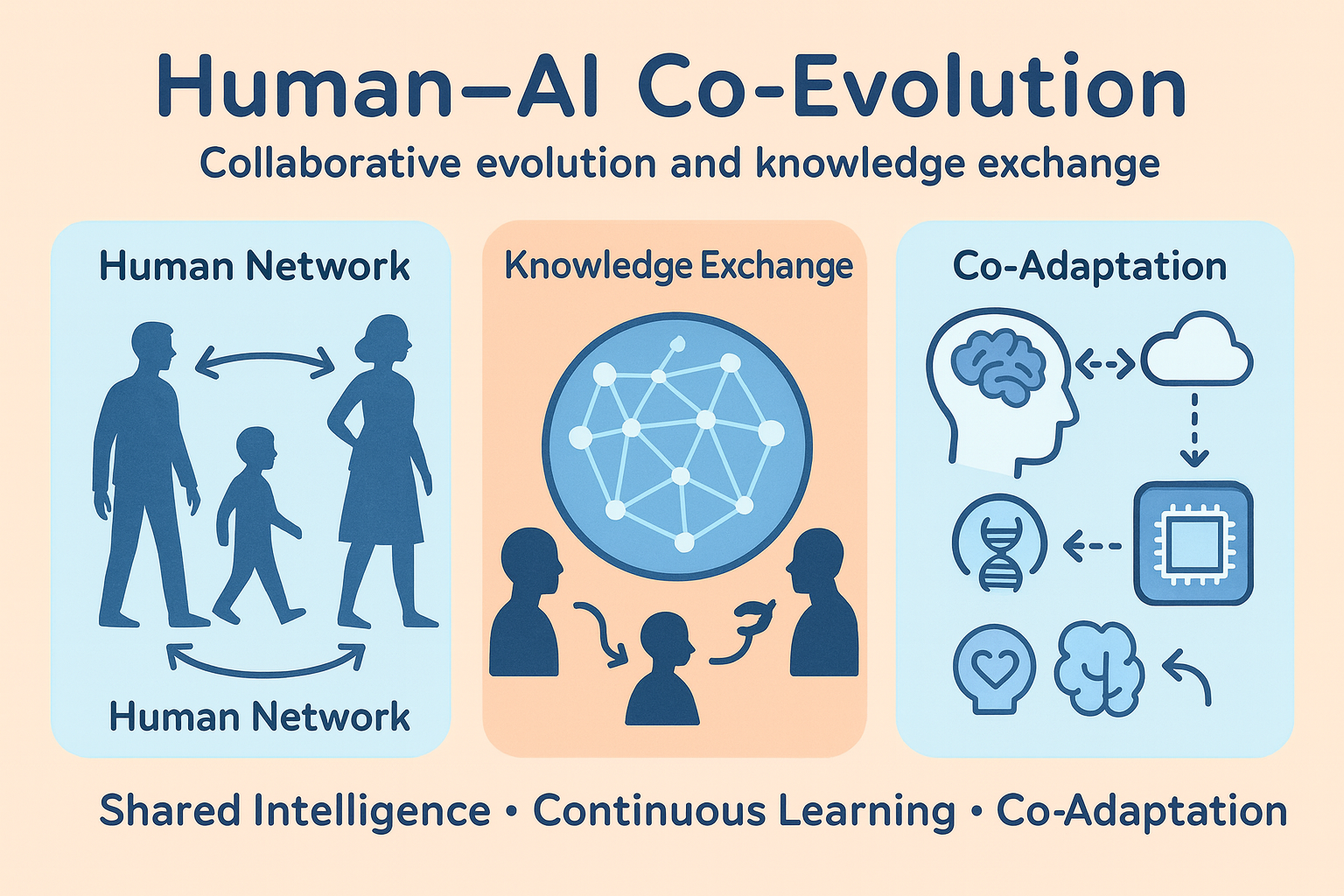

Figure 1: Conceptual illustration of human–AI co‑evolution. Human silhouettes are connected to glowing AI nodes, symbolizing collaborative evolution and knowledge exchange.

Core Principles:

- Human–AI co‑evolution: humans and AI algorithms continuously influence each other; AI assists in information processing and pattern discovery, while humans provide creativity, intuition and final judgement.

- Task decomposition via AutoLab: complex research goals are broken into interrelated sub‑tasks. Each sub‑task can be assigned to an appropriate execution unit (a human scientist, a dedicated AI assistant or a specialized AI agent), enabling distributed and parallel progress.

- Empowerment, not replacement: AI agents are viewed as powerful collaborators, not substitutes. They handle literature summarization, data analysis and idea generation, leaving strategic planning and critical decisions to human researchers.

- Interdisciplinary collaboration: by making tasks modular and explicit, AutoLab makes it easy to integrate expertise across laboratories and disciplines, enabling cross‑lab and even cross‑institutional collaboration.

Research Directions:

- Protocol design: formalizing human–AI collaboration through a set of related protocols that specify roles, interfaces and hand‑off points between humans and AI agents.

- AI‑Enhanced Institute Protocol: developing institutional guidelines that describe how AI agents support research, including norms for task assignment, progress tracking and evaluation.

- Executable Task Graph Protocol: creating a structured task graph that connects sub‑tasks and assigns them dynamically to suitable agents. This graph becomes the backbone of the agentic research network.

- Human‑centred evaluation: ensuring that AI‑generated outputs are always validated by humans, thereby maintaining scientific rigor and ethical standards.
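The task-graph idea above can be illustrated with a small sketch. It is only an illustration under stated assumptions, not the AutoLab implementation: the `SubTask` fields, the unit names in `UNITS`, the skill labels and the "first matching unit" assignment rule are all hypothetical. It orders sub-tasks by their dependencies using Python's standard `graphlib` module and keeps the final validation step with a human.

```python
from dataclasses import dataclass
from graphlib import TopologicalSorter


@dataclass
class SubTask:
    name: str
    skill: str               # capability the sub-task needs (hypothetical labels)
    depends_on: tuple = ()   # names of sub-tasks that must finish first


# Hypothetical execution units: unit name -> skills it offers.
UNITS = {
    "human_scientist": {"validation", "experiment_design"},
    "literature_agent": {"literature_review"},
    "analysis_agent": {"data_analysis"},
}


def pick_unit(task, units):
    """Return the first unit (in alphabetical order) offering the needed skill."""
    for unit in sorted(units):
        if task.skill in units[unit]:
            return unit
    raise LookupError(f"no unit can handle {task.name!r}")


def schedule(tasks, units):
    """Return (batch, task_name, unit) triples in dependency order.

    Tasks that share a batch number have no unmet dependencies on each
    other and could run in parallel.
    """
    by_name = {t.name: t for t in tasks}
    sorter = TopologicalSorter({t.name: set(t.depends_on) for t in tasks})
    sorter.prepare()
    plan, batch = [], 0
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # all dependencies already done
        for name in ready:
            plan.append((batch, name, pick_unit(by_name[name], units)))
        sorter.done(*ready)
        batch += 1
    return plan


TASKS = [
    SubTask("survey_literature", "literature_review"),
    SubTask("design_experiment", "experiment_design", ("survey_literature",)),
    SubTask("analyze_pilot_data", "data_analysis", ("survey_literature",)),
    # Human-centred evaluation: validation is only offered by the human unit.
    SubTask("validate_findings", "validation",
            ("design_experiment", "analyze_pilot_data")),
]

plan = schedule(TASKS, UNITS)
for batch, name, unit in plan:
    print(batch, name, unit)
```

In this toy plan the two middle sub-tasks land in the same batch, which is where parallel progress would come from; a real scheduler would also need to handle failures, re-assignment and task updates.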

Part 2: AI‑Enhanced Research Institution — Multi‑Agent Collaboration

Our Objective:

Traditional research institutions often rely on hierarchical information flow from principal investigators to senior researchers, junior researchers and students. This structure hampers flexibility and slows down knowledge dissemination. To overcome these limitations, we propose an AI‑Enhanced Institute, powered by a network of specialized AI agents that collaborate with humans. Google’s AI co‑scientist demonstrates how a multi‑agent system can serve as a virtual scientific collaborator: built on Gemini 2.0, it helps researchers generate novel hypotheses and research proposals. The system leverages a coalition of specialized agents—Generation, Reflection, Ranking, Evolution, Proximity and Meta‑review—that iteratively generate, evaluate and refine hypotheses. Inspired by such advances, we aim to build an agentic research network in which specialized AI agents communicate, allocate resources and evolve together with human scientists.

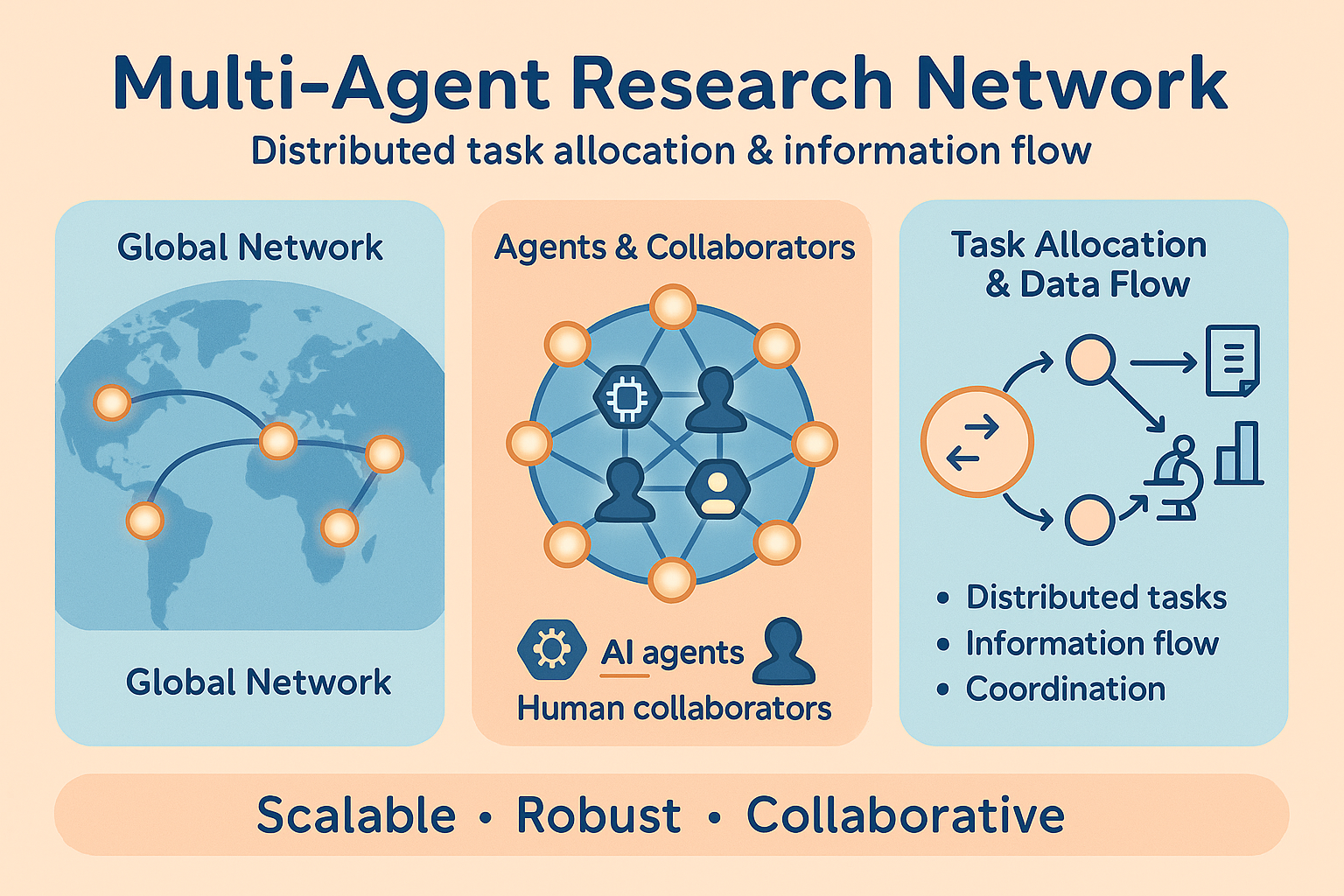

Figure 2: A conceptual multi‑agent research network. Glowing nodes represent AI agents and human collaborators connected across a global network, illustrating distributed task allocation and information flow.

Key Features:

- Multi‑agent collaboration: tasks are distributed among specialized agents that mirror steps of the scientific method—generation, reflection, ranking and evolution. Automated feedback loops enable agents to iteratively improve hypotheses and proposals.

- Supervisor orchestration: a supervisor agent parses high‑level goals into a research plan, assigns specialized agents to the worker queue and allocates compute resources. This orchestration scales to complex projects and adapts to evolving requirements.

- Human–agent interaction: scientists interact with the system by providing seed ideas, guidance and feedback. The system, in turn, uses web search and domain‑specific tools to enhance the grounding and quality of generated hypotheses.

- Cross‑hierarchical integration: by transforming every researcher into a “human–AI node,” the institute collapses traditional hierarchies. Undergraduate students, graduate students, postdocs and faculty all interface with their AI assistants, enabling seamless collaboration across levels.

- Information flow and continuous learning: agentic networks operate around the clock, allowing continuous progress and rapid dissemination of insights. Feedback from experiments and literature reviews flows back into the task graph, creating a data‑driven closed loop that accelerates discovery.
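As a rough illustration of the generate, reflect, rank and evolve loop under a supervisor, the sketch below wires four stub "agents" together. It is a toy under stated assumptions and does not reproduce Google's AI co‑scientist or any real agent framework: every function name, the string-based hypotheses and the `toy_score` heuristic are hypothetical placeholders for model calls and expert critique.

```python
from typing import Callable, List, Optional, Tuple

Hypothesis = str


def generate(seed: Hypothesis) -> List[Hypothesis]:
    """Generation agent (stub): propose variants of a seed idea."""
    return [f"{seed} via mechanism {label}" for label in ("A", "B", "C")]


def reflect(candidates: List[Hypothesis],
            score: Callable[[Hypothesis], float]) -> List[Tuple[float, Hypothesis]]:
    """Reflection agent (stub): attach a critique score to each candidate."""
    return [(score(h), h) for h in candidates]


def rank(reviewed: List[Tuple[float, Hypothesis]], keep: int) -> List[Hypothesis]:
    """Ranking agent (stub): keep the top-scoring candidates (ties keep input order)."""
    ordered = sorted(reviewed, key=lambda pair: pair[0], reverse=True)
    return [h for _, h in ordered[:keep]]


def evolve(survivors: List[Hypothesis]) -> List[Hypothesis]:
    """Evolution agent (stub): produce a refined version of each survivor."""
    return [f"{h} (refined)" for h in survivors]


def supervisor(seed: Hypothesis,
               score: Callable[[Hypothesis], float],
               rounds: int = 2,
               keep: int = 2,
               human_review: Optional[Callable[[Hypothesis], bool]] = None
               ) -> List[Hypothesis]:
    """Run the loop for a fixed number of rounds, then apply human review."""
    pool = generate(seed)
    for _ in range(rounds):
        survivors = rank(reflect(pool, score), keep)
        pool = survivors + evolve(survivors)
    final = rank(reflect(pool, score), keep)
    if human_review is not None:
        # The scientist has the last word on what leaves the loop.
        final = [h for h in final if human_review(h)]
    return final


def toy_score(h: Hypothesis) -> float:
    """Placeholder critique: rewards refinement and (arbitrarily) mechanism B."""
    return h.count("(refined)") + (1.0 if "mechanism B" in h else 0.0)


shortlist = supervisor("seed idea", toy_score, rounds=1,
                       human_review=lambda h: True)
print(shortlist)
```

The `human_review` callback is the point where a scientist's guidance and feedback would enter; in this sketch it is simply a filter applied to the final shortlist.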

Future Directions:

The AI‑Enhanced Institute will explore the integration of agent interoperability protocols such as Anthropic's Model Context Protocol (MCP) and Google's Agent2Agent (A2A) protocol. It will also develop secure and reliable AI‑alignment techniques, explore decentralized task markets and build a global Agent Enhanced Internet for research collaboration. Such infrastructure is intended to position the university at the forefront of AI‑driven scientific innovation.

Part 3: AI‑Augmented Education — Integrating Teaching and Research

Our Objective:

Education is not separate from research; rather, it is the training ground for future innovators. To prepare students for an AI‑first world, we advocate a task‑driven, AI‑augmented learning model. In this model, principal investigators and senior researchers publish simplified modules of their research projects on the AutoLab platform. Students choose tasks aligned with their interests, and AI tutors generate customized background materials, prerequisite knowledge maps and tailored learning paths. The aim is to enable students to “learn by doing,” acquiring scientific thinking through real research problems while being supported by AI guidance.

Figure 3: A student interacts with a friendly holographic AI tutor via a tablet. The AI provides personalized guidance and enables continuous, 24/7 learning support.

Key Initiatives:

- 24/7 AI tutors: AI tutors offer round‑the‑clock support, answering questions, guiding problem‑solving and inspiring curiosity. They adapt to each student’s pace and learning style, ensuring no one is left behind.

- Interactive simulations and personalized paths: AI tutors use interactive simulations to make abstract STEM concepts tangible, and they create custom learning paths that cater to individual interests and strengths.

- Learning by doing: students tackle real or highly realistic research tasks under the guidance of AI tutors and human mentors. Their outputs—code, experiments and analyses—are recorded and assessed, with outstanding results feeding back into active research projects.

- Integrated evaluation: periodic assessments, including closed‑book exams without AI assistance, ensure that students genuinely master the underlying knowledge. AI helps identify knowledge gaps and provides targeted remediation.

- Human focus on mentorship: by offloading repetitive instruction to AI tutors, educators can devote more time to high‑level guidance, critical feedback and fostering creativity. This paradigm encourages students to develop scientific intuition alongside practical skills.
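One way to picture how a prerequisite knowledge map and assessment results could yield a tailored learning path is sketched below. This is a minimal illustration, not the platform's actual method: the topic names, the `PREREQS` map, the exam scores and the 0.7 mastery threshold are all hypothetical. Topics a student has already mastered are skipped, and the remaining ones are ordered so that prerequisites come first.

```python
from graphlib import TopologicalSorter

# Hypothetical prerequisite map: topic -> topics that must be learned first.
PREREQS = {
    "linear_algebra": set(),
    "probability": set(),
    "python_basics": set(),
    "statistics": {"probability"},
    "machine_learning": {"linear_algebra", "statistics", "python_basics"},
    "research_task": {"machine_learning"},
}


def required_topics(target, prereqs, mastered):
    """Collect the target and its unmastered transitive prerequisites."""
    needed, stack = set(), [target]
    while stack:
        topic = stack.pop()
        if topic in needed or topic in mastered:
            continue  # already collected, or the student already knows it
        needed.add(topic)
        stack.extend(prereqs.get(topic, ()))
    return needed


def learning_path(target, prereqs, mastered=frozenset()):
    """Return the topics still to learn, with prerequisites first."""
    needed = required_topics(target, prereqs, mastered)
    graph = {t: set(prereqs.get(t, ())) & needed for t in needed}
    sorter = TopologicalSorter(graph)
    sorter.prepare()
    path = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted for a reproducible order
        path.extend(ready)
        sorter.done(*ready)
    return path


# Hypothetical closed-book exam scores in [0, 1]; 0.7 is an assumed threshold.
exam_scores = {"python_basics": 0.9, "probability": 0.8, "linear_algebra": 0.4}
mastered = {topic for topic, s in exam_scores.items() if s >= 0.7}

path = learning_path("research_task", PREREQS, mastered)
print(path)
```

Here the low score in `linear_algebra` marks a knowledge gap, so that topic stays on the path, while the two mastered topics are dropped.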

Programmatic Steps:

- Launch AI literacy courses: make AI understanding a core requirement for all students, exploring both fundamental concepts and hands‑on applications. These courses incorporate AI tools directly into assignments to demonstrate how AI complements human thinking.

- Establish AI+X innovation communities: organize hackathons, seminars and mini‑projects that bring together students from diverse disciplines. Students use AI to explore creative intersections—such as AI + biology or AI + art—and present their findings.

- Build the “Science Brain” project: aggregate data from educational tasks and research experiments into a shared knowledge base. Scientists design the top‑level architecture, while students conduct experiments and analyses under AI guidance. The resulting ecosystem fosters a pipeline of talent and accelerates institutional learning.

Part 4: Toward a Human‑Centred, AI‑Driven Future

Our vision goes beyond adopting cutting‑edge models; it seeks to reshape how science and education are organized. By intertwining human insight with agentic AI, we aim to create a round‑the‑clock, distributed research ecosystem that includes everyone from undergraduates to principal investigators. This shift is intended to elevate human creativity, improve information flow and open new avenues for scientific discovery. Through careful protocol design, multi‑agent collaboration and AI‑augmented education, we are laying the foundation for a future in which human and machine intelligence co‑evolve for the benefit of both science and society.